Local AI & What It Means for Gaming

Apple recently announced Apple Intelligence, its first generative artificial intelligence (AI) offering, which brings local large language model (LLM) inference to an array of Apple devices. Inference, whether for LLMs or for machine learning (ML) and AI models generally, is the process by which a model applies its training to analyze new data (a query to ChatGPT, for example) and make predictions (or generate responses).

Apple is not the first to bring LLM inference to end-user devices. Google's Tensor G4 chips in Pixel 9 phones, Samsung's Exynos chips, and Qualcomm’s Snapdragon Gen 3 chips (offered to multiple manufacturers) all support local inference, for example. However, Apple Intelligence will be supported on devices with M1 or A17 Pro processors and newer, which means this local AI will work on the iPhone 15 Pro (or newer) and on certain Mac and iPad models going back to 2020.

This backward compatibility gives Apple a competitive advantage: it will have a large Apple Intelligence user base on day one of release (Apple sold 38.7m iPhones with A17 Pro chips in 1H24 alone). Microsoft, by comparison, requires new chips to run Copilot+ features locally on PCs (Forbes). Apple can do this because it is leveraging the Apple Neural Engine, a type of Neural Processing Unit it has been incorporating into devices since 2017. Neural Processing Units (NPUs), or AI accelerators, are chips specifically designed for AI and ML tasks.

This week, we will look at the evolution of specialized chips for AI and ML applications, the current landscape of innovation and development, and the impact that local inference will have on gaming and other latency-sensitive applications.

Specialized Chips for AI: From GPUs to NPUs

For the past two decades, Graphics Processing Units (GPUs) have been favored for inference (and training) of AI models. GPUs were originally developed for processing graphics, which found a strong market in video games and propelled Nvidia (one of the first GPU chip companies) to initial success. Before GPUs, video games and other graphics-intensive applications relied on the Central Processing Unit (CPU), the main processing unit in any computer, for their computations.

CPUs are flexible and can handle any task, but they are not specialized. Over time, special-purpose accelerators such as the GPU were developed to handle specific tasks faster and more efficiently. Although GPUs were developed for graphics processing, the computations they focus on (parallel arithmetic operations: running many calculations or processes simultaneously) turned out to be very well suited to machine learning training and inference.
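To make "parallel arithmetic" concrete: much of a neural network's work is matrix multiplication, where each output element is a dot product that depends only on one row of weights and the input. The minimal Python sketch below (the function names are our own, for illustration) computes a matrix-vector product sequentially, one output element after another, and then dispatches every row independently. A GPU exploits the same independence across thousands of cores; the Python threads here only demonstrate that the rows can be computed separately and will not run faster, because standard CPython's global interpreter lock serializes this pure-Python arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """One output element: multiply-accumulate over a single row of weights."""
    return sum(w * x for w, x in zip(row, vec))


def matvec_sequential(matrix, vec):
    # Sequential style: compute output elements one after another.
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    # Each row's dot product depends only on that row and the input vector,
    # so all rows can be dispatched at once. pool.map keeps results in row order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vec), matrix))


weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
inputs = [1, 0, -1]

print(matvec_sequential(weights, inputs))  # [-2, -2, -2]
print(matvec_parallel(weights, inputs))    # [-2, -2, -2]
```

Both versions produce the same result because no output element needs another one as input; that independence is what parallel hardware turns into speed.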

As AI models and the volumes of data they handle have grown, their processing requirements have grown exponentially. GPUs typically have a small amount of fast-access memory on the chip, alongside the cores that process data, and a larger amount of off-chip memory that is slower to access. When an AI model runs, it stores intermediate results in memory as the computation proceeds, then compiles and returns a final inference result. The fast on-chip memory of most standard GPUs is too small for the data that today's ML models must process, so intermediate results have to be shuttled to off-chip memory, which takes ~2000x longer to access than on-chip memory and uses ~200x as much energy (The Economist). This memory-access bottleneck pushed researchers to develop more specialized chips (NPUs) with larger on-chip memory and alternative architectures, making them more efficient for ML tasks.
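A back-of-the-envelope model shows why spilling intermediate results off-chip matters. The sketch below plugs in the ~2000x time and ~200x energy ratios cited above and counts every memory access in units of one on-chip access. The access counts are hypothetical, and the model is deliberately simplified: it ignores caching, prefetching, and the overlap of memory transfers with computation that real hardware uses to hide latency.

```python
# Relative costs taken from the ratios cited above (The Economist):
# an off-chip access takes ~2000x as long and uses ~200x as much energy
# as an on-chip access.
OFF_CHIP_TIME_FACTOR = 2000
OFF_CHIP_ENERGY_FACTOR = 200


def memory_cost(on_chip_accesses: int, off_chip_accesses: int) -> tuple[int, int]:
    """Return (time, energy), both measured in units of one on-chip access."""
    time = on_chip_accesses + off_chip_accesses * OFF_CHIP_TIME_FACTOR
    energy = on_chip_accesses + off_chip_accesses * OFF_CHIP_ENERGY_FACTOR
    return time, energy


# Hypothetical workload: 1,000,000 accesses for intermediate results.
# Smaller on-chip memory: 10% of accesses spill off-chip.
small_on_chip = memory_cost(on_chip_accesses=900_000, off_chip_accesses=100_000)
# Larger on-chip memory: only 1% of accesses spill off-chip.
large_on_chip = memory_cost(on_chip_accesses=990_000, off_chip_accesses=10_000)

print(small_on_chip)  # (200900000, 20900000)
print(large_on_chip)  # (20990000, 2990000)
print(round(small_on_chip[0] / large_on_chip[0], 1))  # 9.6
print(round(small_on_chip[1] / large_on_chip[1], 1))  # 7.0
```

Under these assumptions, cutting the off-chip share from 10% to 1% lowers memory time by about 9.6x and energy by about 7x, the kind of saving that motivates chips with larger on-chip memory.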

Though NPUs are better optimized for their specific ML tasks, their specialization has a downside: less flexibility. CPUs are the most flexible processors. They can do anything, but they are not as fast or efficient at certain tasks, especially large, multi-step, real-time tasks, because they largely process work sequentially rather than in parallel. Special-purpose accelerators achieve their efficiency by integrating tightly with the software they run. If the software changes, these accelerators cannot adapt as easily and will likely perform worse on the new tasks. Though GPUs are specialized, they remain fairly generic: their arithmetic capabilities support graphics processing and a wide array of ML model compute. NPUs are much more specialized, architected with the algorithms and software of specific models in mind.

We are still early in the generative AI and LLM race: these models will change over time, and many startups are going after the opportunity to supplant Nvidia and the GPU. What is state of the art today, and potentially most promising from a research perspective, could be entirely different from what is actually adopted one to two years down the road. Producing these specialized chips, and adopting them in devices, carries significant risk of obsolescence. Despite these risks, it is likely that new chips dedicated to various parts of the AI stack will become more efficient and widely adopted; local inference is one such area.

The Impact of NPU Optimization and Local Inference on Gaming

Jay Goldberg of D2D Advisory estimates that, in the future, 15% of AI silicon will be for training, 45% for data center inference, and 40% for on-device inference, an estimate we agree with. Depending on each use case's requirements, some inference will run in the cloud, some on edge servers closer to end users, and some directly on local devices.

Today, most games render locally, using local compute (both CPU and GPU) to execute game code. Multiplayer games typically also run an additional instance of the game in the cloud that acts as the referee between the players in a game lobby (making sure everything stays correctly synced). Games that want to leverage AI services today mostly need to go off-device for inference. For AI used in game design and development, this does not matter: that work is done before a game (or update) is released, so latency is not a concern.

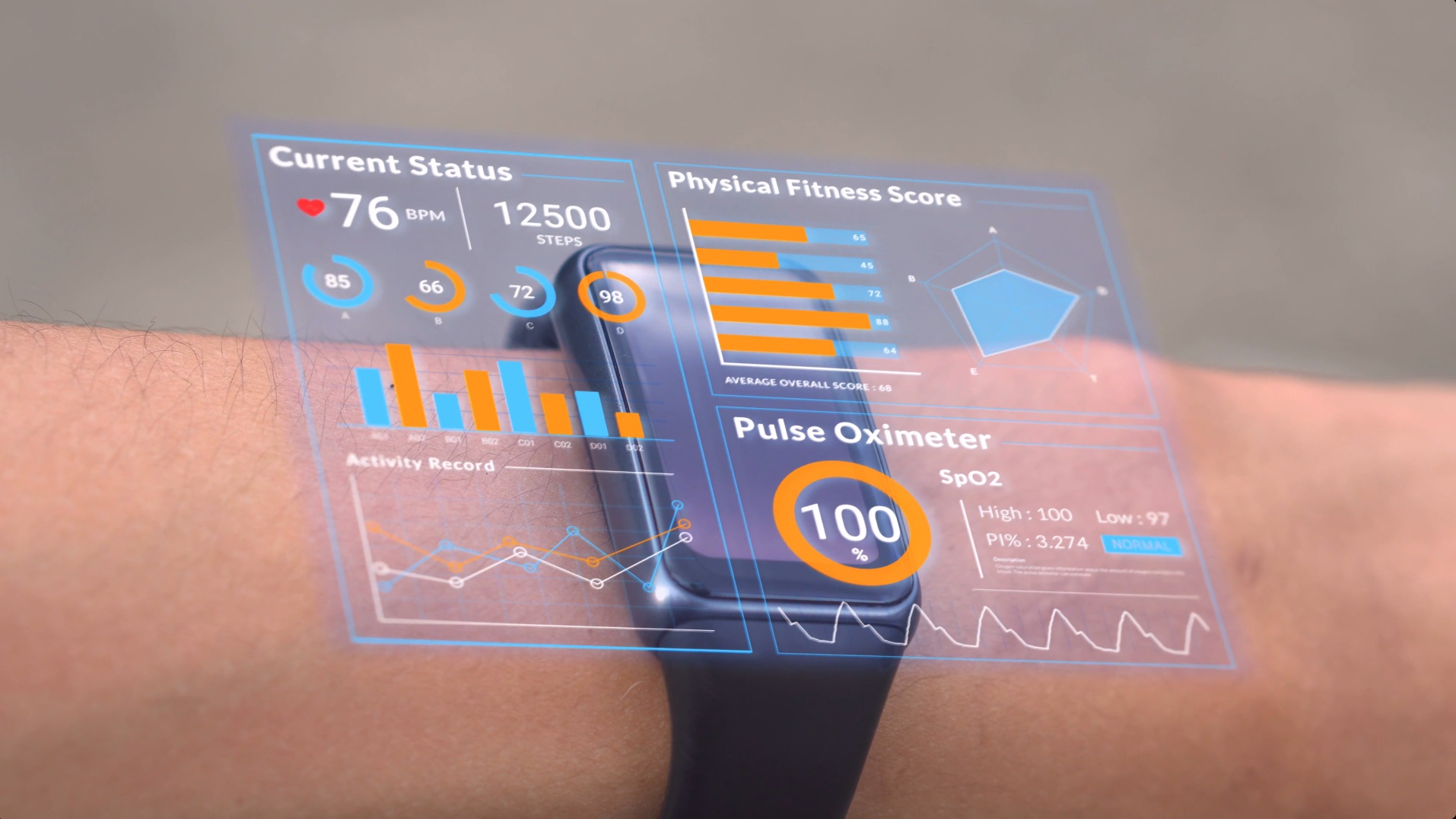

AI used in real-time gameplay, however, will likely be latency-sensitive. Use cases such as AI non-player characters (NPCs) or agents, AI-aided user-generated content (UGC), and chat-enabled in-game guides will benefit from local NPU inference: latency is reduced, and game developers keep the on-device compute economics they currently thrive on (local compute is essentially free to them).
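The trade-off can be sketched as a simple placement rule: run inference wherever the total latency (network round trip plus compute) fits the use case's budget, and among those options prefer the one that is cheapest for the developer. This is a minimal, hypothetical Python sketch; `InferenceTarget`, `pick_target`, and every latency and cost figure are illustrative assumptions, not measurements or any vendor's API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class InferenceTarget:
    name: str
    round_trip_ms: float  # network round trip to reach the target (0 on-device)
    compute_ms: float     # time for the model to produce a result there
    cost_per_call: float  # hypothetical marginal cost to the developer per request

    def total_latency_ms(self) -> float:
        return self.round_trip_ms + self.compute_ms


def pick_target(targets: List[InferenceTarget], budget_ms: float) -> Optional[InferenceTarget]:
    """Return the cheapest target that meets the latency budget (ties broken
    by lower latency), or None if no target is fast enough."""
    feasible = [t for t in targets if t.total_latency_ms() <= budget_ms]
    if not feasible:
        return None
    return min(feasible, key=lambda t: (t.cost_per_call, t.total_latency_ms()))


# Illustrative numbers only, not measurements.
targets = [
    InferenceTarget("on-device NPU", round_trip_ms=0, compute_ms=60, cost_per_call=0.0),
    InferenceTarget("edge server", round_trip_ms=20, compute_ms=30, cost_per_call=0.3),
    InferenceTarget("cloud", round_trip_ms=80, compute_ms=20, cost_per_call=0.1),
]

print(pick_target(targets, budget_ms=100).name)  # on-device NPU
print(pick_target(targets, budget_ms=55).name)   # edge server
print(pick_target(targets, budget_ms=40))        # None
```

Because local compute costs the developer essentially nothing, the on-device option wins whenever it is fast enough; off-device targets are chosen only when the device cannot meet the budget on its own.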

Takeaway: As AI models continue to evolve, so do the hardware and architecture of the processors that support them. Progress on both the hardware and software sides of AI training and inference continues to deliver step-change advances in AI's capabilities and in its accessibility to end users. Specialized processors like NPUs are more efficient at certain tasks but are also less adaptable to future software and model changes. Regardless, companies like Apple, Google, and others are pushing ahead with on-device NPU offerings that open up the market to local inference. For gaming, and many other latency-sensitive areas, this enables a more viable economic model for bringing AI use cases to players and users.
